In this post, we will look at LRU Cache Implementation. For this implementation, we will use Javascript Linked List and Objects.

However, before actually getting into the details of the implementation, we should first understand what LRU is.

If you are more interested in watching a video then reading, we also have a detailed video about the topic.

1 – What is LRU Cache?

LRU stands for Least Recently Used. In a nutshell, it is a cache eviction policy where we state that when our cache fills up and a new element comes in, we remove the Least Recently Used item from the cache.

Any cache that uses this LRU eviction policy is known as LRU Cache.

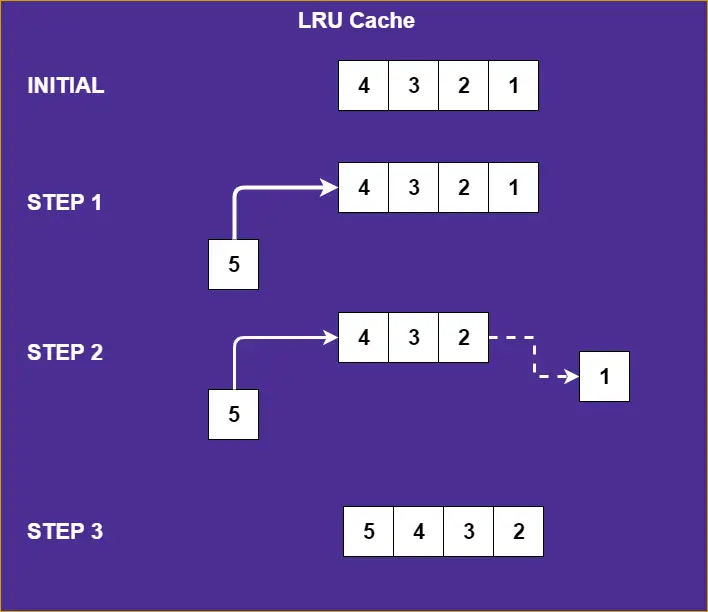

Below is an illustration to describe LRU Cache.

Here, we have a cache of size 4. As an example, it can initially have 4 elements as 4, 3, 2, 1. Now, if a new element (consider 5) is added to the cache, it becomes the most recent element in the cache. Hence, we place it in the beginning.

However, if we do this, we also exceed the size of the cache. So we have to remove an element from the cache. Therefore, we remove the element 1. In other words, the least recently used or accessed element in the cache.

In a nutshell, this is what we mean by LRU Cache.

2 – LRU Cache Implementation

To implement LRU Cache, there are few general rules we have to follow:

- Insertion of a new element in the LRU Cache should be done with time complexity of O(1). In other words, constant time.

- Also, retrieval of an element from the Cache should be with time complexity of O(1).

- When we retrieve an item from the Cache, it should become the most recently used item in the list. In other words, it should be on top of the list.

So what all data-structures can we use for such an implementation?

To start with we can use a List. The ideal choice here would be a Doubly Linked List. Using a doubly linked list will allow us to remove an element from the cache in O(1) time complexity which is very important for us.

We also need a way to access the element in O(1) time complexity. To do so, we need a Map data structure. In Javascript, we can achieve this by using Javascript Objects.

Some of the rules we can use to make it work are as follows:

- Insert a new element at the head of the Doubly Linked List.

- On every read or insert to the cache, the element that is read or inserted is moved to the head of the Linked List.

- If the cache limit is exceeded while inserting, remove the element at the tail of the Linked List.

- Store the key and value of the relation in an object. This allows retrieval in O(1) time complexity.

3 – LRU Cache Implementation Steps

Having set the ground rules, let’s now start our implementation.

3.1 – The Node Class

Node class stores the element in the Linked List.

class Node { constructor(key, value) { this.key = key; this.value = value; this.next = null; this.prev = null; } }

Since we are working with Doubly Linked List, each node will point to the next as well as the previous node. If you wish to read more it, I have a detailed post on Linked List Implementation in Javascript.

3.2 – The LRU Cache Class

This is the main part of our implementation. Basically, this is where we will define the behavior of our LRU Cache.

class LRUCache { constructor() { this.head = null; this.tail = null; this.size = 0; this.maxSize = 4; this.cache = {}; } }

We have the head and tail elements. Then, we have the size attribute to store the current size of the cache. Also, the maxSize property to store the maximum size of the cache. Lastly, we have the cache object. We initialize it to an empty javascript object in the constructor.

3.3 – The Put Element Function

The first function we have to implement is to put a new item into our cache. Below is the implementation for the same:

put(key, value) {

let newNode

// if the key not present in cache

if (this.cache[key] === undefined) newNode = new Node(key, value);

//if we have an empty list

if(this.size === 0) {

this.head = newNode;

this.tail = newNode;

this.size++;

this.cache[key] = newNode;

return this;

}

if (this.size === this.maxSize) {

//remove from cache

delete this.cache[this.tail.key]

//set new tail

this.tail = this.tail.prev;

this.tail.next = null;

this.size--;

}

//add an item to the head

this.head.prev = newNode;

newNode.next = this.head;

this.head = newNode;

this.size++;

//add to cache

this.cache[key] = newNode;

return this;

}

Basically, we check whether the item with the given key is already present. If not, we insert it into the list and the cache object.

Also, if it is the first element in the cache, we make it the head and tail of the list. Otherwise, we make the new node as the head of the list.

Lastly, if we reach the maximum size of the list, we remove the item at the tail of the list.

3.4 – The Get Element Function

The second function is to get an element from the cache. As per our requirement, this should also happen in O(1) time complexity. Below is the implementation for the same.

get(key) {

if (!this.cache[key]){

return undefined

}

let foundNode = this.cache[key];

if(foundNode === this.head) return foundNode;

let previous = foundNode.prev;

let next = foundNode.next;

if (foundNode === this.tail){

previous.next = null;

this.tail = previous;

}else{

previous.next = next;

next.prev = previous;

}

this.head.prev = foundNode;

foundNode.next = this.head;

foundNode.prev = null;

this.head = foundNode;

return foundNode;

}

We use the cache object to find the element with the input key. Since it is an object, we can do the operation in constant time complexity. Now, the important thing is to make the element that was accessed as the first element in the list. In other words, we want to make it the most recently used element.

If it is already the first element, we can simply return it. If that’s not the case, then we have to remove the element from the list and place it as the new head. While removing, we have to connect the element’s previous node with the element’s next node.

Conclusion

With this, we are basically done. Our LRU Cache Implementation is complete. We can test it our by inserting and accessing few elements.

let cache = new LRUCache(); cache.put(1, 'A'); cache.put(2, 'B'); cache.put(3, 'C'); cache.put(4, 'D'); cache.get(2); cache.get(1); cache.put(5, 'E'); cache.put(6, 'F'); cache.get(1); cache.get(8);

If you wish to play around with the code, it is available on this Gist.

Comments/queries are welcome in the comments section below.

2 Comments

Ashwin Kumar · April 27, 2020 at 4:21 am

Hi Saurabh,

First of all, huge kudos for posting such detailed explanations for every datastructure and algorithms. I just wanted to bring out to your notice about an error situation in the LRUCache implementation. Your implementation fails during collision such as key with multiple values.

Saurabh Dashora · April 28, 2020 at 3:06 am

Hi Ashwin,

Thanks for the great feedback.

And yes, I had not handled that condition explicitly because didn’t want to deviate from the actual concept behind LRU Cache.

Will try to put a note about it in the post itself.