Distributed systems are all the rage these days. However, building and operating distributed systems is hard in the best of times. To top it up, we often end up making things even more difficult by ignoring the key challenges associated with distributed systems.

In today’s post, we are going to remedy this problem by looking at 5 major challenges in building distributed systems.

Though our focus would be slightly higher level in this post, it will serve as a great starting point for someone looking to start learning about distributed systems.

1 – What is a Distributed System?

In his important 1994 paper titled Scale in Distributed Systems, the famous researcher B Clifford Neuman describes distributed systems in pretty simple terms:

A distributed system is a collection of computers, connected by a computer network, working together collectively to implement some minimal set of services.

Though this definition is quite accurate, it does not make a few things obvious.

What comes under the scope of a computer?

Does it include only physical devices or even software processes?

To make things clearer, here’s another take on defining a distributed system:

A distributed system is composed of nodes that cooperate to achieve some task.

But what is a node?

Any physical machine (like a computer, phone etc.) or a software process (such as a browser) is a node. These nodes exchange messages over communication links.

Of course, we might not be completely satisfied with the second definition as well. This is because distributed systems can have multiple dimensions depending on your perspective

- From a physical perspective, a distributed system can be thought of as an ensemble of physical machines communicating over network links.

- From a run-time perspective, a distributed system is composed of software processes that communicate via inter-process communication (IPC) mechanisms such as HTTP. These processes are hosted on physical machines.

- From an implementation perspective, a distributed system is a set of loosely-coupled components (known as services) that can be deployed and scaled independently.

These perspective-driven definitions enable us to fit distributed systems in our specific world view whether it is infrastructure, operations or development.

Lastly, there is a rather funny but equally wise definition of distributed systems by the famous computer scientist Leslie Lamport.

A distributed system is one in which the failure of a computer you didn’t even know existed can render your own computer unusable.

2 – Challenges of building Distributed Systems

Let us now look at some of the key challenges of building distributed systems.

2.1 – Communication

Nodes in a distributed system need to communicate with each other.

Even the seemingly simple task of browsing a website in a web browser requires significant amount of communication between different processes.

When we visit a URL, our browser resolves the server address of the URL by communicating with the DNS. Once it gets hold of the actual address, it sends a HTTP request to the server over the network. The server does its own thing and sends a response back over the network.

- How are request and response messages represented over the wire?

- What happens in the case of a network outage?

- How to guarantee security from snooping?

These are just a few of the questions about communication channels facing the designers and developers of a distributed system.

Of course, for many of these communication challenges, abstractions such as TCP and HTTPS have been built. But many times, abstractions can leak and the challenge falls on the developer to handle those situations.

2.2 – Coordination

Failures are an integral part of a distributed system.

For whatever reason, a node within a distributed system can fail due to a fault. Basically, a fault is a component that stops working.

While starting off, you might be optimistic and feel that you can build a fault-free system. But it would be a naive pursuit that is bound to fail.

The famous thought experiment about the two generals problem shows the fallacy of trying to completely eradicate faults.

In this problem, there are two generals (think of them as nodes), each commanding his own army. To capture a particular city, both the generals need to agree on a time to attack jointly. Since the armies are geographically separated, the only way to communicate is by sending a messenger. Unfortunately, these messengers can be captured by the enemy (similar to a network failure).

How can the two generals agree on a time?

One general could propose a time to the other using a messenger and wait for the response.

But what if no response arrives? Was the messenger captured? Could the messenger be injured and taking longer than expected? Should the general send another messenger?

As you can see, the problem is not trivial to solve.

No matter how many messengers are dispatched, neither general can be completely certain that the other army will attack the city at the correct time. Sending more messengers can increase the chances, but it never reaches a 100% guarantee of success.

This is the same in the context of a distributed system. Larger the distributed system, higher the probability of faults in some parts of the system.

The point is that you cannot completely avoid failures in a distributed system.

The challenge of distributed systems is coordinating nodes into a single coherent whole in the presence of failures. A system is considered fault tolerant when it can continue to operate despite the presence of one or more faults.

2.3 – Scalability

A lot of importance is attached to scalability in a distributed system. It also happens to be one of the biggest challenges in building distributed systems.

Scalability is a complex topic and requires a deeper investigation. But, for the time being, we can consider it as a measure of a system’s performance in relation to increasing load.

But how to measure the performance and load of a distributed system?

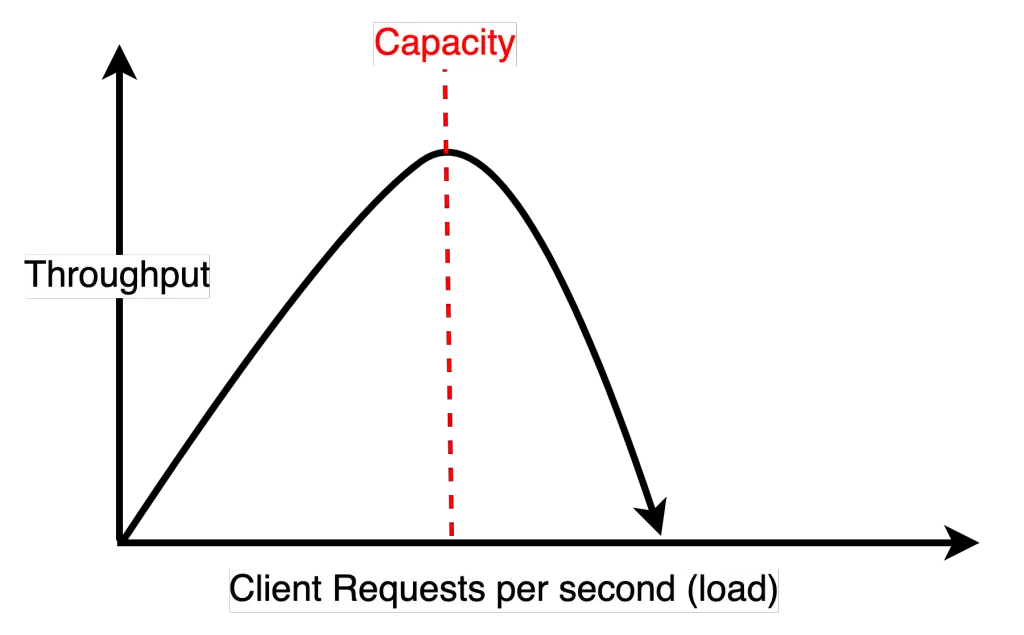

- For performance, we can use two excellent parameters – throughput and response time. Basically, throughput denotes the number of operations processed per second. Response time is the total time elapsed between a client request and response.

- Measuring load, on the other hand, is more specific to the system use case. For example, load can be measured as the number of concurrent users, number of communication links or the ratio of writes to reads.

Performance and load are inherently tied to each other.

Typically, as the load increases, it will eventually reach the system’s capacity. This capacity of a distributed system depends on the overall architecture. More than that, it also depends on a bunch of physical limitations such as:

- Node’s memory size and clock cycle

- Bandwidth and latency of the network links

When the load reaches the capacity, the system’s performance either plateaus or worsens.

If the load on the system continues to grow, it will eventually hit a point where most operations fail or timeout. In other words, throughput or response time fall off a cliff and the system might become practically unusable.

In such a situation, we say that the system is not scalable beyond a certain point.

So how do we make the system scalable?

If load reaching the capacity is the cause of system degradation, it follows that a system can be made scalable by increasing the capacity.

A quick and easy way to increase capacity is buying more expensive hardware with better performance metrics. This approach is also known as *********scaling up.

Though it sounds good on paper, this approach will hit a brick wall sooner or later.

The more sustainable approach is adding more machines to the overall system. This is known as scaling out.

2.4 – Resiliency

As discussed earlier, failures are pretty commonplace in a distributed system. The very nature of distributed systems augments the propensity of failures.

- Firstly, scaling out increases the probability of failure. Since each and every component in the system has an inherent probability of failing, addition of more pieces only compounds the overall chances of failure.

- Second, more components means a higher number of operations. This also means higher the absolute number of failures that can occur.

- Lastly, failures are not independent. Failure of one component increases the probability of failures in other components.

It goes without saying that when a system is operating at scale, any failure that can happen will eventually happen. This dramatically increases the challenges with distributed systems.

A distributed system is considered resilient when it can continue to do its job even when failures occur. An ideal distributed system must embrace failures and behave in a resilient manner.

There are several techniques such as redundancy and self-healing mechanisms that can help increase the resiliency of a system.

However, this is not a zero-sum game.

No distributed system can be a 100% resilient. At some point, failures are going to put a dent in the system’s availability.

Basically, availability is the amount of time the application can serve requests divided by the duration of the period measured.

You might have seen organizations touting their system’s availability in percentage terms. Those percentages use the concept of nines.

Three nines (99.9%) is mostly considered acceptable for a large number of systems. Anything above four nines (99.99%) is highly available. An even higher number of nines may be needed for mission critical systems.

Here’s a handy chart that demonstrates the availability percentages in terms of actual downtime.

| Availability | Downtime per day |

|---|---|

| 90% (one nine) | 2.40 hours |

| 99% (two nines) | 14.40 minutes |

| 99.9% (three nines) | 1.44 minutes |

| 99.99% (four nines) | 8.64 seconds |

| 99.999% (five nines) | 864 milliseconds |

2.5 – Operations

Just like with any software system, distributed systems also need to be tested, deployed and maintained.

Earlier development and operations used to be different teams.

One team developed an application and threw it over the proverbial wall that separated the operations department. The operations team was responsible for operating the application.

However, with the rise of microservices and DevOps, things have changed. The same team that develops and designs a system is also responsible for its live-site operation.

This opens up a bunch of challenges that development teams should be equipped to handle:

- New deployments need to be rolled out continuously in a safe manner

- Systems need to be observable

- Alerts need to be fired when service-level objectives are at a risk of being breached

In a way, this is a good thing as there is no better way to find out where a system falls short than experiencing it by being on-call for it.

Just make sure you get properly paid for ruining your weekends.

Conclusion

To summarize, building distributed systems is not an easy task and is fraught with some significant challenges. The main challenges revolve around the areas of communication, coordination, scalability, resiliency and operations.

Distributed systems come in all shapes and sizes. However, the fundamental challenges in building such systems remain the same. Only the approach to handle these challenges may vary depending on the type of system.

In future posts, we will be taking deep dives into each of these fundamental challenges and how we can handle them while designing a system.

0 Comments